Embodied Hands: Modeling and Capturing Hands and Bodies Together

Javier Romero*, Dimitrios Tzionas* and Michael J. Black

SIGGRAPH Asia 2017, Bangkok, Thailand

Abstract

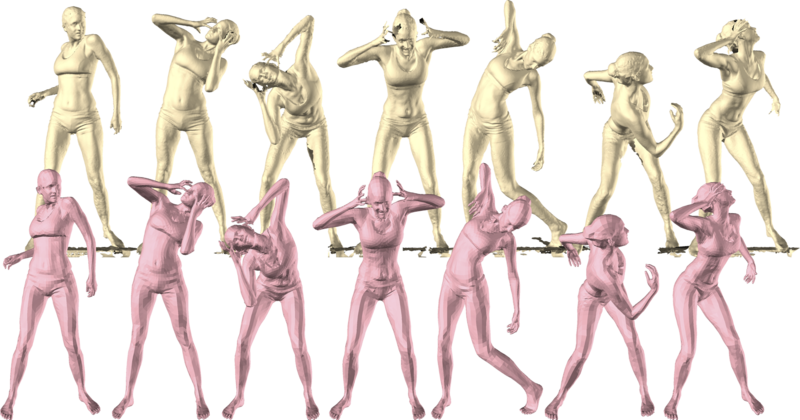

Humans move their hands and bodies together to communicate and solve tasks. Capturing and replicating such coordinated activity is critical for virtual characters that behave realistically. Surprisingly, most methods treat the 3D modeling and tracking of bodies and hands separately. Here we formulate a model of hands and bodies interacting together and fit it to full-body 4D sequences. When scanning or capturing the full body in 3D, hands are small and often partially occluded, making their shape and pose hard to recover. To cope with low resolution, occlusion, and noise, we develop a new model called MANO (hand Model with Articulated and Non-rigid defOrmations). MANO is learned from around 1000 high-resolution 3D scans of hands of 31 subjects in a wide variety of hand poses. The model is realistic, low-dimensional, captures non-rigid shape changes with pose, is compatible with standard graphics packages, and can fit any human hand. MANO provides a compact mapping from hand poses to pose blend shape corrections and a linear manifold of pose synergies. We attach MANO to a standard parameterized 3D body shape model (SMPL), resulting in a fully articulated body and hand model (SMPL+H). We illustrate SMPL+H by fitting complex, natural activities of subjects captured with a 4D scanner. The fitting is fully automatic and results in full-body models that move naturally with detailed hand motions and a realism not seen before in full-body performance capture. The models and data are freely available for research purposes on our website (http://mano.is.tue.mpg.de).
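The "compact mapping from hand poses to pose blend shape corrections" and the "linear manifold of pose synergies" can be illustrated with a minimal NumPy sketch. It assumes a SMPL-style formulation (rest template, plus linear shape offsets, plus pose-corrective offsets driven by rotation matrices minus identity) and array sizes matching the public MANO release (778 vertices, 16 joints, 10 shape coefficients, a 45-dimensional finger pose). The function names are hypothetical, random arrays stand in for the learned parameters, and the final linear blend skinning step is omitted.

```python
import numpy as np

# Sizes assumed from the public MANO release: 778 vertices, 16 joints
# (a global root plus 15 finger joints) and 10 shape coefficients.
N_VERTS, N_JOINTS, N_BETAS = 778, 16, 10
N_POSE_FEATS = (N_JOINTS - 1) * 9  # one flattened 3x3 rotation per non-root joint


def rodrigues(axis_angle):
    """Convert an (..., 3) axis-angle array into (..., 3, 3) rotation matrices."""
    angle = np.linalg.norm(axis_angle, axis=-1, keepdims=True)
    axis = axis_angle / np.maximum(angle, 1e-8)
    x, y, z = axis[..., 0], axis[..., 1], axis[..., 2]
    zeros = np.zeros_like(x)
    # Skew-symmetric cross-product matrix K of the unit rotation axis
    K = np.stack([zeros, -z, y, z, zeros, -x, -y, x, zeros], axis=-1)
    K = K.reshape(axis.shape[:-1] + (3, 3))
    s = np.sin(angle)[..., None]
    c = np.cos(angle)[..., None]
    eye = np.broadcast_to(np.eye(3), K.shape)
    return eye + s * K + (1.0 - c) * (K @ K)


def pca_to_finger_pose(pca_coeffs, components, mean):
    """Hypothetical helper: map a few 'pose synergy' coefficients to 15 axis-angles.

    components: (45, 45) PCA basis (rows are components), mean: (45,) mean pose.
    """
    n = pca_coeffs.shape[0]
    return (mean + pca_coeffs @ components[:n]).reshape(N_JOINTS - 1, 3)


def shaped_posed_template(template, shapedirs, posedirs, betas, finger_pose):
    """Hypothetical helper: rest-pose vertices with shape and pose correctives.

    template: (778, 3), shapedirs: (778, 3, 10), posedirs: (778, 3, 135),
    betas: (10,), finger_pose: (15, 3) axis-angle for the non-root joints.
    """
    shape_offsets = shapedirs @ betas  # (778, 3)
    # Pose features are R - I, so a zero axis-angle pose adds no correction
    pose_feats = (rodrigues(finger_pose) - np.eye(3)).reshape(-1)  # (135,)
    pose_offsets = posedirs @ pose_feats  # (778, 3)
    return template + shape_offsets + pose_offsets


# Random placeholders standing in for the learned model parameters
rng = np.random.default_rng(0)
template = rng.normal(size=(N_VERTS, 3))
shapedirs = 0.01 * rng.normal(size=(N_VERTS, 3, N_BETAS))
posedirs = 0.01 * rng.normal(size=(N_VERTS, 3, N_POSE_FEATS))
components = rng.normal(size=(45, 45))
mean = np.zeros(45)

# Six synergy coefficients instead of the full 45-dimensional finger pose
pose = pca_to_finger_pose(0.1 * rng.normal(size=6), components, mean)
verts = shaped_posed_template(template, shapedirs, posedirs, np.zeros(N_BETAS), pose)
print(verts.shape)  # (778, 3)
```

Because both blend-shape terms are linear in their inputs (shape coefficients and rotation-matrix features), they reduce to matrix-vector products; the nonlinearity lives only in the Rodrigues conversion and in the skinning step not shown here.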

More Information

- PDF preprint

- MANO Project page at MPI:IS

- Data, models, and sample code can be downloaded after signing up and agreeing to the license. If you have already signed up, you can simply log in.

News

Model/Code Versions:

v1.0 - 22 Nov 2017 - Initial model release. The shape space was not scaled.

v1.1 - 14 May 2018 - MANO PKL files changed: the shape space (shapedirs) was scaled to unit variance.

v1.2 - 16 Jan 2019 - No model files changed. Some bugs in the code were fixed and a 3D viewer was added for visualization.
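The v1.1 note implies that shape coefficients (betas) fitted with v1.0 files are not directly interchangeable with v1.1 files. The sketch below assumes, as our reading of the changelog rather than something it states, that each shape direction k was multiplied by a positive factor sigma_k (the standard deviation of the v1.0 coefficient), so that sigma_k * beta gives identical vertex offsets under v1.0. The helper names are hypothetical, and random arrays replace the real PKL contents.

```python
import numpy as np


def estimate_sigma(shapedirs_v10, shapedirs_v11):
    """Per-component scale factors, assuming v1.1 only rescaled each shape direction.

    Both inputs have shape (n_verts, 3, n_betas); the ratio of column norms gives sigma_k.
    """
    n10 = np.linalg.norm(shapedirs_v10.reshape(-1, shapedirs_v10.shape[-1]), axis=0)
    n11 = np.linalg.norm(shapedirs_v11.reshape(-1, shapedirs_v11.shape[-1]), axis=0)
    return n11 / n10


def betas_v11_to_v10(betas_v11, sigma):
    # (shapedirs_v10 * sigma) @ b == shapedirs_v10 @ (sigma * b)
    return sigma * betas_v11


def betas_v10_to_v11(betas_v10, sigma):
    return betas_v10 / sigma


# Synthetic check with random placeholders instead of the real model files
rng = np.random.default_rng(1)
shapedirs_v10 = rng.normal(size=(778, 3, 10))
true_sigma = rng.uniform(0.5, 3.0, size=10)
shapedirs_v11 = shapedirs_v10 * true_sigma  # broadcasts over the last (beta) axis

sigma = estimate_sigma(shapedirs_v10, shapedirs_v11)
betas_v11 = rng.normal(size=10)
offsets_v11 = shapedirs_v11 @ betas_v11
offsets_v10 = shapedirs_v10 @ betas_v11_to_v10(betas_v11, sigma)
print(np.allclose(offsets_v10, offsets_v11))  # True
```

If the real v1.0 and v1.1 shapedirs differ by more than a per-column rescaling, the norm ratio is no longer meaningful and this conversion does not apply.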

Nov 2021: We now support conversion between all the models in the SMPL family, i.e. SMPL, SMPL+H, SMPL-X. https://github.com/vchoutas/smplx/tree/master/transfer_model

Nov 2019: We uploaded MANO fits for the HIC dataset of Tzionas et al. IJCV'16, used in Hasson et al. ICCV'19.

Referencing the Dataset

Here is the BibTeX snippet for citing MPI MANO in your work.

@article{MANO:SIGGRAPHASIA:2017,
  title = {Embodied Hands: Modeling and Capturing Hands and Bodies Together},
  author = {Romero, Javier and Tzionas, Dimitrios and Black, Michael J.},
  journal = {ACM Transactions on Graphics, (Proc. SIGGRAPH Asia)},
  volume = {36},
  number = {6},
  series = {245:1--245:17},
  month = nov,
  year = {2017},
  month_numeric = {11}
}

Papers that build on MANO

Below is a non-exhaustive, continuously updated list of papers that build on MANO. This list is MANO-focused; a much more exhaustive one is the awesome hand pose estimation list on GitHub, with which we do not intend to compete in any way.

If we have missed your work, please feel free to contact us with a brief description of it (2-3 sentences, e.g. input, output, and nugget).

Please note that we only add works that:

- present a new dataset with MANO fits to images or other modalities,

- or regress into the MANO model space (as opposed to model-free mesh regression),

- and have already made their data/models publicly available.

Inference: RGB-to-MANO (regression-based)

- Yang et al., OakInk, CVPR 2022

- Yang et al., CPF, ICCV 2021

- Hasson et al., ObMan, CVPR 2019

- Boukhayma et al., 3D Hand Shape and Pose from Images in the Wild, CVPR 2019

- Zhou et al., Monocular Real-time Hand Shape and Motion Capture using Multi-modal Data, CVPR 2020

- Hasson et al., Leveraging Photometric Consistency over Time for Sparsely Supervised Hand-Object Reconstruction, CVPR 2020

- Choutas et al., ExPose, ECCV 2020

- Wang et al., RGB2Hands, SIGGRAPH Asia 2020

- Corona et al., GanHand, CVPR 2020

Inference: RGB-to-MANO (optimization-based)

- Zhang et al., InteractionFusion, TOG 2019

- Mueller et al., TwoHands, SIGGRAPH 2019

Datasets that use MANO

- DART, NeurIPS 2022 Datasets and Benchmarks Track

- HANDS ICCV 2019 challenge (includes parts of HO-3D, as well as BigHand2.2M and F-PHAB, which were updated with MANO fits)

- Hasson et al., ObMan, CVPR 2019

- Zimmermann et al., FreiHAND dataset, ICCV 2019

- Hampali et al., HO-3D, CVPR 2020

- Kulon et al., YouTube 3D Hands, CVPR 2020

- Tzionas et al., HIC, IJCV 2016 (updated with MANO fits)

- Brahmbhatt et al., ContactPose, ECCV 2020

- Moon et al., InterHand2.6M, ECCV 2020

- Corona et al., GanHand, CVPR 2020

- Taheri et al., GRAB, ECCV 2020

Expressive human models that employ MANO & SMPL+H

- Pavlakos et al., SMPL-X, CVPR 2019

Extending MANO with an appearance model

- Qian et al., HTML, ECCV 2020